Exploring OpenAI’s New Embeddings vs. Open Source Models

Introduction to OpenAI's New Models

OpenAI recently released two new embedding models, text-embedding-3-small and text-embedding-3-large. Both promise better performance and more competitive pricing than the company's previous embedding models.

OpenAI Announcement Overview

The announcement covers the release of two new models that aim to improve text embedding quality. OpenAI is not the only player in the text embedding arena, though. As someone who often opts for open-source solutions due to budget constraints, I decided to compare the new OpenAI models against some top-tier open-source alternatives.

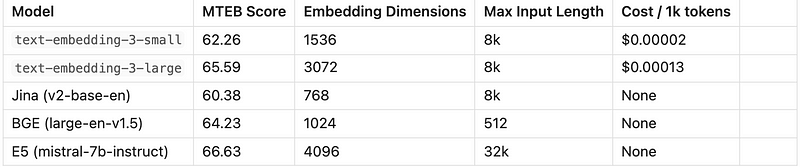

For this analysis, we’ll compare OpenAI’s new models against the following open-source models:

- Jina

- BGE

- E5

The comparison will focus on several key metrics:

- MTEB score (Massive Text Embedding Benchmark, English)

- Embedding Dimensions

- Maximum Input Length (in tokens)

- Cost per 1k tokens

Comparison Overview

Now that we have our criteria and contenders set, let’s jump into the comparison.

MTEB Score Insights

On the MTEB leaderboard, OpenAI’s text-embedding-3-large ranks second with a score of 65.59. The E5 model tops the list at 66.63, while Jina trails with 60.38.

Winner: E5
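
The ranking can be reproduced in a few lines of Python. This is only a minimal sketch built from the three scores quoted in this section; the dictionary keys are shorthand labels chosen for the example, not official model identifiers.

```python
# MTEB scores as quoted in this article (English benchmark).
# Keys are shorthand labels chosen for this sketch.
mteb_scores = {
    "text-embedding-3-large": 65.59,
    "E5": 66.63,
    "Jina": 60.38,
}


def rank_by_score(scores):
    """Return (model, score) pairs sorted from highest to lowest score."""
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)


ranking = rank_by_score(mteb_scores)
for position, (model, score) in enumerate(ranking, start=1):
    print(f"{position}. {model}: {score:.2f}")
```

Keeping the scores in a dictionary makes it easy to add further models from the leaderboard and re-run the ranking.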

Embedding Dimensions Analysis

The embedding dimensions follow the same order as the MTEB scores. The text-embedding-3-large model has 3072 dimensions, placing it second to the E5 model with 4096 dimensions. Jina falls short with only 768 dimensions.

Winner: E5
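
One practical consequence of these dimension counts is storage, since every extra dimension is one more number to keep per vector. The sketch below estimates the raw size of one million embeddings for each model. It assumes vectors are stored as 32-bit floats and ignores any index overhead, so treat the output as a rough lower bound rather than a measurement.

```python
BYTES_PER_FLOAT32 = 4  # assumption: vectors are stored as 32-bit floats

# Dimensions as quoted in this article.
dimensions = {
    "text-embedding-3-large": 3072,
    "E5": 4096,
    "Jina": 768,
}


def index_size_mb(num_vectors, dims, bytes_per_value=BYTES_PER_FLOAT32):
    """Raw size of num_vectors embeddings in megabytes (no index overhead)."""
    return num_vectors * dims * bytes_per_value / 1_000_000


for model, dims in dimensions.items():
    size = index_size_mb(1_000_000, dims)
    print(f"{model}: {size:,.0f} MB per million vectors")
```

Under these assumptions, a 4096-dimension model needs a bit more than five times the space of a 768-dimension one for the same number of documents.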

Maximum Input Length Considerations

Interestingly, Jina distinguishes itself as the first open-source model to support a maximum input length of 8k tokens, making it comparable to OpenAI’s offerings. However, the E5 model excels in this category with a remarkable maximum input length of 32k tokens.

Winner: E5
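
The input limit decides how many pieces a long document has to be split into before it can be embedded. Here is a minimal sketch of that arithmetic. The exact limits (8192 and 32768) are my reading of "8k" and "32k", the document length is hypothetical, and `split_tokens` works on an already tokenized list because real token counts depend on each model's tokenizer.

```python
import math

# Maximum input lengths in tokens. The article rounds these to "8k" and
# "32k"; the exact values below are an assumption of this sketch.
max_input_tokens = {
    "Jina": 8192,
    "E5": 32768,
}


def chunks_needed(doc_tokens, max_tokens):
    """Number of non-overlapping chunks needed to cover doc_tokens tokens."""
    if doc_tokens <= 0:
        return 0
    return math.ceil(doc_tokens / max_tokens)


def split_tokens(tokens, max_tokens):
    """Split a token list into consecutive chunks of at most max_tokens."""
    return [tokens[i:i + max_tokens] for i in range(0, len(tokens), max_tokens)]


doc_tokens = 20_000  # hypothetical document length
for model, limit in max_input_tokens.items():
    print(f"{model}: {chunks_needed(doc_tokens, limit)} chunk(s)")
```

Fewer chunks means fewer vectors to store and less context lost at chunk boundaries, which is why a longer input window matters for large documents.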

Cost Evaluation

When it comes to cost, the open-source models are free to use if you run them on your own hardware. OpenAI’s pricing for the new embedding models is surprisingly reasonable. Jina also offers a paid API, but unless you purchase tokens in bulk, OpenAI’s embeddings can actually be more affordable than Jina’s.
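
Since API pricing is quoted per 1k tokens, estimating the bill for a corpus is simple arithmetic. The sketch below shows the calculation. The price and corpus size are placeholders for illustration only, not quoted rates, so plug in the current numbers from each provider's pricing page.

```python
def embedding_cost(num_tokens, price_per_1k_tokens):
    """Cost in dollars of embedding num_tokens at a given price per 1k tokens."""
    return num_tokens / 1000 * price_per_1k_tokens


# Placeholder price for illustration only, not a quoted rate.
HYPOTHETICAL_PRICE_PER_1K = 0.0001

corpus_tokens = 50_000_000  # hypothetical corpus size
cost = embedding_cost(corpus_tokens, HYPOTHETICAL_PRICE_PER_1K)
print(f"Embedding {corpus_tokens:,} tokens costs about ${cost:.2f}")
```

Running the same function with each provider's real price makes the comparison concrete for your own data volume.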

Summary of Findings

Across these comparisons, the E5 model emerges as the top choice. However, usability matters too: e5-mistral-7b-instruct is powerful, but as a 7B-parameter model it may not be feasible to run on modest hardware like an M1 MacBook Air.

Recommendations:

- For a budget-conscious option: Choose OpenAI’s new embeddings.

- For large documents: Opt for Jina.

- For small documents: Consider BGE.

- If you have access to a powerful GPU: Go for E5.
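
These recommendations can also be written as a small decision helper. This is only a sketch: the function name, the boolean flags, and especially the order of the checks are my assumptions, since the list above does not rank the recommendations against each other.

```python
def recommend(has_powerful_gpu, budget_conscious, large_documents):
    """Map the article's recommendations onto a simple decision function.

    The order of the checks is an assumption of this sketch; the article
    lists the recommendations without ranking them.
    """
    if has_powerful_gpu:
        return "E5"
    if budget_conscious:
        return "OpenAI text-embedding-3"
    if large_documents:
        return "Jina"
    return "BGE"


print(recommend(has_powerful_gpu=False, budget_conscious=False, large_documents=True))
```

Reordering the `if` statements changes which recommendation wins when several conditions apply, so adjust the priority to your own situation.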

Personal Reflections

I have not used OpenAI’s embeddings so far because the open-source alternatives are so strong. Still, I am intrigued by these new models given their improved cost-effectiveness and performance, particularly for commercial applications.

Final Thoughts:

What are your views on these new developments? I’m eager to hear your insights!

References

- OpenAI Announcement Post

- MTEB Leaderboard

This article is published on Generative AI.