Unlocking the Future of AI: Neuromorphic Computing Explained

Chapter 1: Understanding Neuromorphic Computing

The quest to replicate the human brain in a non-organic form could ultimately pave the way for the first artificial general intelligence (AGI). The human brain is arguably the most intricate structure we know of: it contains roughly 86 billion neurons, each connected to thousands of others, for an estimated 100 trillion or more synaptic connections. This complexity allows the brain to perform a wide variety of functions, including language processing, reasoning, emotional responses, and creativity. Currently, no artificial intelligence (AI) can match the brain's general intelligence. Well-known systems such as GPT-4, LaMDA, and Sophia perform well on specific tasks but do not attain the broad cognitive abilities of the human brain. Matching, let alone surpassing, human intelligence would require AGI, and neuromorphic computing may hold the key.

Source: Student Circuit

Returning to Core Principles

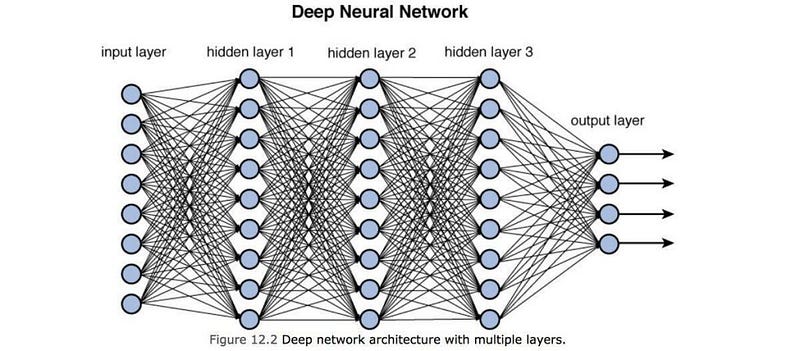

At the heart of today's sophisticated AI systems are deep artificial neural networks. These networks are built from artificial neurons (the perceptron is the simplest such model of a biological neuron), and the largest contain billions of adjustable parameters. Machine learning techniques train these networks on data, adjusting the parameters step by step so that the network's outputs move closer to the desired results. In essence, the adjustable parameters play a role loosely analogous to the strengths of synaptic connections in the brain.

A deep neural network is made up of interconnected layers of virtual artificial neurons. Each neuron's value is influenced by its weights, biases, and the values of the previous layer's neurons. These weights and biases are the parameters adjusted via machine learning techniques.
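To make the description above concrete, here is a minimal sketch of how one layer's values can be computed from the previous layer. The sigmoid activation, the function names, and the example numbers are assumptions chosen for illustration; real networks use many different activations and far larger layers.

```python
import math


def sigmoid(x: float) -> float:
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def dense_layer(inputs, weights, biases):
    """Compute one layer of artificial neurons.

    weights[j][i] is the weight from input i to neuron j;
    biases[j] is the bias of neuron j.
    """
    outputs = []
    for neuron_weights, bias in zip(weights, biases):
        # Weighted sum of the previous layer's values, plus the bias.
        z = sum(w * x for w, x in zip(neuron_weights, inputs)) + bias
        outputs.append(sigmoid(z))
    return outputs


# Hypothetical example: 3 neurons in the previous layer, 2 in this one.
previous_layer = [0.5, -1.0, 2.0]
weights = [[0.1, 0.2, 0.3], [-0.4, 0.5, 0.6]]
biases = [0.0, 0.1]
layer_values = dense_layer(previous_layer, weights, biases)
```

Training amounts to nudging the numbers in `weights` and `biases` until `layer_values` (and the layers after it) produce the desired outputs.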

It's important to note that today's neural networks exist purely in software. The physical hardware that runs them, built primarily from transistors (MOSFETs), is organized very differently from the biological synaptic architecture of the human brain. The goal of neuromorphic computing is to create hardware that more closely mimics the brain's synaptic structure by employing physical artificial neurons in place of virtual ones.

Chapter 2: The Path to Neuromorphic Computing

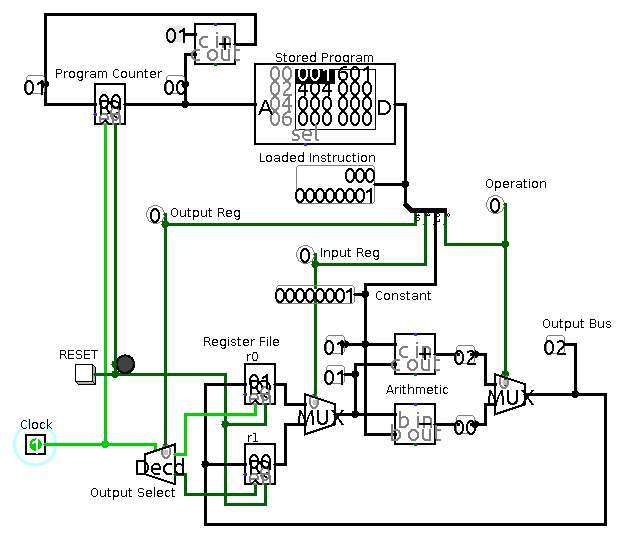

In traditional computers, various configurations of transistors create a multitude of logic gates, which then form the components of a CPU—the core of the computer's operation.

Standard CPUs operate in a defined instruction cycle (fetch-decode-execute), driven by an internal clock. This synchronous operation contrasts sharply with the brain's asynchronous nature, where stimuli can cause neurons to activate simultaneously across different regions. While it is possible to combine multiple CPUs to enhance processing, this still falls short of the brain's parallel processing capabilities.
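The clocked cycle described above can be sketched as a toy interpreter. This is only an illustration: the instruction names (`LOAD`, `ADD`, `HALT`), the single register, and the `run` function are hypothetical and do not correspond to any real CPU.

```python
def run(program):
    """Execute a toy program one instruction per 'clock tick'."""
    accumulator = 0
    program_counter = 0
    while program_counter < len(program):
        instruction = program[program_counter]  # fetch
        opcode, operand = instruction           # decode
        program_counter += 1
        if opcode == "LOAD":                    # execute
            accumulator = operand
        elif opcode == "ADD":
            accumulator += operand
        elif opcode == "HALT":
            break
        else:
            raise ValueError(f"unknown opcode: {opcode}")
    return accumulator


result = run([("LOAD", 2), ("ADD", 3), ("ADD", 5), ("HALT", 0)])
print(result)  # 10
```

Every step happens strictly in sequence, one after another. Nothing in this loop resembles many neurons reacting independently to incoming stimuli.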

Another distinction lies in memory. In the brain, memory and computation sit in the same place: each neuron and its synapses hold their own state. A conventional computer, by contrast, keeps its working data in RAM, separate from the processor, and must move that data back and forth for every operation. This separation creates latency and energy inefficiency, a limitation known as the von Neumann bottleneck. As a result, contemporary computer systems struggle to match the brain's efficiency and parallel processing capabilities.

The first video, "Revolutionizing Computing: The Future of Neuromorphic Technology," delves into how neuromorphic computing aims to enhance computing architecture to better mimic the human brain.

A Potential Route to AGI

In late 2017, Intel announced Loihi, a neuromorphic research chip featuring 131,072 artificial neurons and about 130 million synaptic connections. Loihi is built from conventional transistors (MOSFETs) but arranges them into spiking artificial neurons that mimic properties of biological neurons, including synaptic weights and firing thresholds. The chip operates through impulses (spikes) sent to specific artificial neurons. A neuron fires only when its membrane potential exceeds a certain threshold, which makes the system asynchronous and dynamic, much like a biological neural network.
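The threshold behavior described above can be illustrated with a leaky integrate-and-fire neuron, a common textbook model of a spiking neuron. This is a simplified discrete-time sketch, not Loihi's actual neuron model; the function name and the `threshold`, `leak`, and `reset` values are assumptions picked for the example.

```python
def simulate_lif(input_current, threshold=1.0, leak=0.9, reset=0.0):
    """Simulate one leaky integrate-and-fire neuron.

    At each step the membrane potential decays by the factor `leak`,
    then the incoming current is added. If the potential exceeds
    `threshold`, the neuron emits a spike (1) and resets.
    """
    potential = reset
    spikes = []
    for current in input_current:
        potential = leak * potential + current
        if potential > threshold:
            spikes.append(1)
            potential = reset
        else:
            spikes.append(0)
    return spikes


# Weak steady input, a pause, then two strong pulses.
spike_train = simulate_lif([0.3, 0.3, 0.3, 0.3, 0.3, 0.0, 0.0, 0.9, 0.9])
print(spike_train)  # [0, 0, 0, 1, 0, 0, 0, 1, 0]
```

The neuron stays silent until enough input has accumulated, so its output depends on the timing of its inputs as well as their size.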

Training such a system involves strengthening certain synaptic connections while weakening others, following the Hebbian principle that "neurons that fire together wire together." While spiking neural networks have been simulated on traditional hardware, Loihi represents one of the first attempts to realize this architecture physically.
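As a rough sketch of that principle, the snippet below strengthens a connection whenever the neurons on both sides of it spike in the same time step, and lets it slowly weaken otherwise. This is a deliberately simplified Hebbian rule, not the learning rule used on Loihi; the function name, `learning_rate`, and `decay` are assumptions for illustration.

```python
def hebbian_update(weight, pre_spike, post_spike,
                   learning_rate=0.1, decay=0.02):
    """Return the updated synaptic weight, kept between 0 and 1."""
    if pre_spike and post_spike:
        # Both neurons fired together: move the weight toward 1.
        weight += learning_rate * (1.0 - weight)
    else:
        # Otherwise the connection slowly fades toward 0.
        weight -= decay * weight
    return weight


# Two hypothetical synapses that start out equally strong.
correlated = 0.5
uncorrelated = 0.5
pre_spikes = [1, 0, 1, 1, 0, 1]
for pre in pre_spikes:
    # First synapse: the downstream neuron fires whenever the upstream one does.
    correlated = hebbian_update(correlated, pre, post_spike=pre)
    # Second synapse: the downstream neuron never fires.
    uncorrelated = hebbian_update(uncorrelated, pre, post_spike=0)
```

After the loop, `correlated` has grown and `uncorrelated` has shrunk, which is the strengthening and weakening the paragraph above describes.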

The second video, "Revolutionizing AI: The Dawn of Neuromorphic Computing and Neuron-Inspired Chips," explores how advancements in neuromorphic technology could transform AI capabilities.

Though Loihi has shown promising results, the field of spiking neural networks and neuromorphic computing is still in its infancy. As more neuromorphic chips like Loihi become commercially available, we may witness a surge in their efficiency and computing power, potentially following a trajectory similar to Moore's law. This could lead to the emergence of AI systems capable of performing a wider range of tasks, mirroring human-like cognitive abilities. As we explore the possibilities of neuromorphic computing, we are left to ponder its future. Will it usher in a new era of artificial intelligence, or remain a tantalizing vision just beyond our reach?
